AI Yi-Yi-Yi-Yi! Electronics X-ray Inspection and Artificial Intelligence

What are the questions we should ask before diving in?

To deliberately misquote and mangle Shakespeare once again, I come to praise AI, not to bury it, but does the potential evil it may do live after it, and is the good oft interred in the dataset?

I apologize, but … discussion of the benefits of AI in all manner of applications has been the flavor of the month for much of the last two years, and there seems no end in sight! It has been one of the drivers of processor manufacture and use in recent times. However, two recent articles from BBC News seemed to highlight some pros and cons regarding use of AI for x-ray inspection and test.

The first1 describes how AI has been trained to best radiologists examining for potential issues in mammograms, based on a dataset of 29,000 images. The second2 is more nuanced and suggests that after our recent “AI Summer” of heralded successes on what could be considered low-hanging fruit, we might now be entering an AI Autumn or even an AI Winter. In the future, it suggests, successes with more complex problems may be increasingly difficult to achieve, and attempts are made only due to the hype of the technology rather than the realities of the results.

For x-ray inspection and AI, it is important to distinguish between what are, say, 1-D and 2-D AI approaches. 1-D AI, I suggest, draws predictive information from huge quantities of data, in which it can discern patterns far more easily than a human observer can. It is based on individual data fields, such as those garnered from shopping and social media sites: for instance, a pop-up advertisement for something under consideration for purchase, or inferences on a person’s political and societal alignments based on their social media selections and feeds. We may actively or passively choose to provide such information, and its predictive abilities may be construed as for good or ill.

In 2-D AI, identification and pass/fail analysis are based on images, as is required for x-ray inspection. This approach, I believe, raises whole levels of complexity for the AI task. Thus, I have the following questions about how 2-D AI will work with the x-ray images we capture for electronics inspection:

- What is, and should be, the minimum size of the image dataset on which board analysis can rely? Is there a sufficient quantity of “similar” images from which the AI can build its “ultimately opaque to the user” algorithm on which results will be based? Adding images to the dataset will modify/improve the AI algorithm (more on that in a moment), but how much time is acceptable for human operators to spend grading the AI results to train/improve the dataset, especially if multiple datasets will be required for different inspection tasks, including analyzing the same component at different board locations?

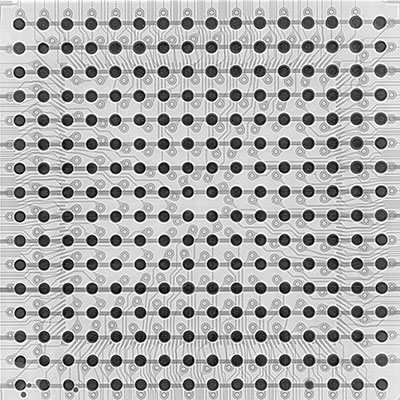

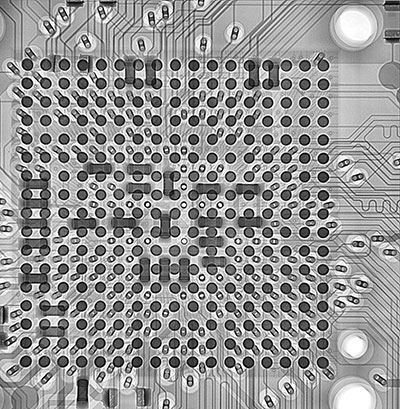

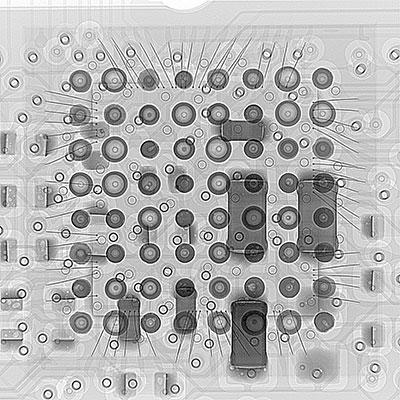

- How complicated are the individual images in what they show? FIGURE 1 shows an x-ray image of a BGA on a simple board with little internal board layer structure and no second-side components. Compare this to FIGURE 2, a more “real” situation seen in PCB production, yet still simpler than much of what is manufactured today. Finally, compare both images to FIGURE 3. Although the fault is clear to the human eye, the additional overlapping information from other parts of the board certainly makes human analysis of this BGA more challenging. If there were only a single, subtle solder ball fault present in Figure 3, then perhaps an AI approach might fare better than a human, given sufficient examples. With this in mind, are there sufficient board quantities to produce an acceptable dataset of images? It is probable that applications like the one shown in Figure 1 are most appropriate for AI today, as each image is likely to be much simpler, and the AI can obtain many similar examples from which subtle variations not observable to the human eye may be found. As for Figures 2 and 3, will new image datasets be needed for analysis of the same component when it is used in different locations on the same board or on entirely different boards? How often might an AI algorithm need to be (re)verified?

Figure 1. Simple image of a BGA?

Figure 2. Less simple image of a BGA?

Figure 3. An easy image for human and AI identification of the issue? Do the second-side components, wires, movement of components relative to each other during reflow, etc. make it more difficult to analyze if only a single, subtle failure is present?

- What is the quality of the images in the dataset? Will the (potential for) variability in the performance of the x-ray equipment hardware and software over time impact AI algorithm performance? The typical x-ray detector used in x-ray equipment for electronics today is a flat panel detector (FPD). These silicon CMOS array devices already include small quantities of missing (non-working) pixels from their manufacture that are interpolated by software methods so as not to be seen in the final image. Can/do the number of missing pixels increase with time and use of the FPD? If so, how often should a missing pixel FPD correction algorithm be run? Once run, does that impact the AI algorithm and call into question, or affect, previous and future results? Does x-ray tube output variability, if it occurs over time, affect the AI algorithm because the image contrast could vary? Can different x-ray equipment be used with the same AI algorithm? If you add images from multiple x-ray systems into the same algorithm, does this affect it in a positive or negative way? What might be the effects on the AI algorithm of other wear and tear, and of component replacement (e.g., the PC), on the x-ray system taking the images? Repeated system image quality checks should mitigate these issues, but how often?

- Is there a possibility of bias of/in the dataset? Perhaps this is more of an issue for facial recognition than when looking at a quantity of boards, but if you are running the same AI algorithm on multiple x-ray systems and all are adding to the learning dataset, does that necessarily mean the test always gets better, and could the dataset become biased? When bringing a new x-ray system online for the same task, do you need to keep a reference standard set of boards upon which to create a “new” AI algorithm if you cannot “add” to an existing one? Are you able to even keep a set of reference boards in case the algorithm is lost or modified from its previously successful version if conditions change? If there is a new algorithm created on a new, additional x-ray system, either from an existing or a new supplier, are any of these truly comparable to what you have done before?

- What about the fragility of the underlying images themselves, ignoring any equipment hardware changes or variability? It has been shown that deliberately modifying only tens of pixels in a facial image can completely change its predictive success within an AI dataset, even though a human operator would not see the modifications. For example, at the recent CES 2020, at least one company offered to frustrate facial recognition checks with a program that deliberately makes minor changes to photos to prevent people from being recognized.3 While deliberately adulterating x-ray images of PCBs is extremely unlikely, could a degrading FPD cause sufficient pixels to be changed such that the results are not recognized by an existing AI algorithm, especially if running the same inspection task over months and years? As suggested above, frequent quality and consistency checks on the image output should help mitigate these fears, but how often are they needed, and how does that impact inspection throughput?

- Ultimately, do any of my questions matter? If you get, or appear to get, a “good” automated pass/fail result, then does the method used matter? If so, can you be confident this will always be the case for that board analysis in the future?
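
To make the missing-pixel question above a little more concrete, here is a minimal sketch of one way a correction might work, and of how one might count the pixels it alters. The median-of-neighbors method, the function names and the tiny 4x4 frame are all my assumptions for illustration; I do not know which interpolation any particular FPD supplier uses.

```python
from statistics import median


def correct_dead_pixels(image, dead):
    """Replace each dead pixel with the median of its working neighbours.

    image: list of rows of grey levels.
    dead:  set of (row, col) positions flagged as non-working (assumed known).
    Returns a new image; the input is left unmodified.
    """
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for r, c in dead:
        neighbours = [
            image[rr][cc]
            for rr in range(max(r - 1, 0), min(r + 2, rows))
            for cc in range(max(c - 1, 0), min(c + 2, cols))
            if (rr, cc) != (r, c) and (rr, cc) not in dead
        ]
        if neighbours:  # leave the pixel alone if every neighbour is dead too
            out[r][c] = median(neighbours)
    return out


def changed_fraction(before, after):
    """Fraction of pixels whose value differs between two same-sized images."""
    total = sum(len(row) for row in before)
    diff = sum(a != b for ra, rb in zip(before, after) for a, b in zip(ra, rb))
    return diff / total


# Hypothetical 4x4 grey-level frame with one dead pixel reading 0 at (1, 2).
frame = [
    [100, 102, 101, 99],
    [101, 100, 0, 100],
    [99, 101, 100, 102],
    [100, 100, 101, 100],
]
fixed = correct_dead_pixels(frame, {(1, 2)})
print(fixed[1][2], changed_fraction(frame, fixed))
```

Tracking something like `changed_fraction` each time the correction map is updated would at least show how much of the image the AI sees is interpolated rather than measured. Whether that number matters to a given AI algorithm remains the open question.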

As a comparison for electronics x-ray inspection today, consider the BBC example of analyzing mammograms.1 They use a large number of images, I assume of similar image quality, on fields of view that are, I understand, painfully obtained in an attempt to achieve more consistency by narrowing the range of tissue thickness across the dataset! In electronics applications, do we have a sufficient quantity of similar images for our board/component analysis AI to produce a reasonable test? Is there more positional and density variation possible in our electronics under test than in a mammogram?

What does this mean for x-ray inspection of electronics? Already many equipment suppliers are offering AI capabilities. But what are the questions we should ask about this amazing technology and its suitability and capabilities for the tasks we must complete? We don’t know precisely the algorithm used for our tests. We are not certain of having sufficient (and equivalent) images or how many are needed. Adding more over time should give better analysis – a larger dataset – but are they consistent with what went before and, if not, are they materially changing the algorithm in a better way, indicating some escapes may have been made in the past? Sophistry perhaps. But if we do not know what the machine algorithm is using to make its pass/fail criteria, are we satisfied with an “it seems to work” approach?

The more complicated the image, the larger the dataset needed to provide a range of pass/fail examples within an inspection envelope. Variability of the mammograms and the 29,000 images used may well lie within a narrower variation envelope than the BGA in Figures 2 and 3. Perhaps AI for electronics is best suited today for where the image is much simpler and smaller, as in Figure 1. Automatically identifying variations in the BGA solder balls would naturally be assumed to be better undertaken by the AI approach, but does the variability of the surrounding information affect the pass/fail AI algorithm? Add the potential for movement of components during reflow, warpage of boards, etc., and we have more variability in the placement of the features of interest, perhaps requiring a larger image dataset to cover this larger range of possibilities. Then consider adding an oblique view for analysis, and these variabilities further enlarge the envelope of possibilities. How many images do you need to get an acceptable test: 100, 1,000, 10,000, 100,000? A bad test is worse than no test, as you are then duplicating effort. Will you get that many images in the test run of the boards you make? And, outside of cellphones, PCs, tablets and automotive (?) applications, is there sufficient product volume to obtain the necessary dataset? If AI tests are applied for automotive applications, is there a complicated equation for quality of test vs. sufficient volume of product vs. complexity/quality of image over time vs. safety-critical implications, should an escape occur?
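
One part of the "how many images" question can at least be put into numbers: verifying a test, as opposed to training it. The sketch below uses the standard zero-failure binomial bound (the "rule of three"). It assumes, and this is my assumption, that the verification images are independent, are all known-defective samples, and that the AI missed none of them. The function names are hypothetical, and the sketch says nothing about how many images the AI needs to learn from.

```python
import math


def escape_rate_upper_bound(n_images, confidence=0.95):
    """Upper confidence bound on the escape rate after 0 escapes in n_images.

    Solves (1 - p)^n = 1 - confidence for p; roughly 3/n at 95% confidence.
    """
    if n_images <= 0:
        raise ValueError("n_images must be positive")
    return 1.0 - (1.0 - confidence) ** (1.0 / n_images)


def images_needed(max_escape_rate, confidence=0.95):
    """Smallest n of escape-free defective images that supports the claimed rate."""
    if not 0.0 < max_escape_rate < 1.0:
        raise ValueError("max_escape_rate must be between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - max_escape_rate))


# The dataset sizes asked about in the text above.
for n in (100, 1_000, 10_000, 100_000):
    print(f"{n:>7} images, zero escapes -> escape rate below "
          f"{escape_rate_upper_bound(n):.5f} at 95% confidence")
```

On these assumptions, 100 escape-free defective images only support a claim of an escape rate below about 3%, which shows how quickly the required volume grows for safety-critical work.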

I have asked too many questions and admittedly do not have the answers. I hope the AI experts do and can advise you accordingly. Perhaps the best approach now is to use AI if you are comfortable doing so and consider the images you will be using for your AI test. The simpler the image, the quicker and better the results perhaps, but does that describe the imaging problem you want your AI to solve? Consider the opportunity for using PCT to obtain images at discrete layers within a board’s depth to improve the AI analysis by decluttering the images from overlapping information. However, are you at the right level in the board if there is warpage? And are you prepared to take at least 8x as long (and perhaps substantially longer) for the analysis, given that a minimum of eight images will be needed to create the CT model from which the analysis can be made?

There are definitely x-ray inspection tasks in electronics for which AI can be considered and used today. Is it your application? Or is it as Facebook AI research scientist Edward Grefenstette says,2 “If we want to scale to more complex behavior, we need to do better with less data, and we need to generalize more.” Ultimately, does any of this matter if we accept that AI is only an assistant rather than the explicit arbiter of the test? AI as the assistant may well be acceptable if the confidence level of its result matches or betters that of human operators. However, can or should AI be used without such oversight today, or even in the future, based on what can be practically achieved? Whatever the decision, I predict this is likely to give metrologists endless sleepless nights!
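
For those who do take the "AI as assistant" route, the idea can be sketched very simply: accept the AI's call only when it is confident, and send everything else to a human operator. The `Call` record, the `triage` function and the 0.90 threshold are hypothetical. That an AI tool reports a usable confidence score at all is an assumption on my part.

```python
from dataclasses import dataclass


@dataclass
class Call:
    board_id: str
    verdict: str       # "pass" or "fail", as reported by the AI
    confidence: float  # 0.0 to 1.0; assumed to be supplied by the AI tool


def triage(calls, threshold=0.90):
    """Split AI calls into those accepted as-is and those sent to an operator."""
    auto, review = [], []
    for call in calls:
        (auto if call.confidence >= threshold else review).append(call)
    return auto, review


# Hypothetical results from one inspection run.
calls = [
    Call("board-001", "pass", 0.99),
    Call("board-002", "fail", 0.97),
    Call("board-003", "pass", 0.62),
]
auto, review = triage(calls)
print([c.board_id for c in auto], [c.board_id for c in review])
```

Where to set the threshold is, of course, another of those questions: too high and the operator reviews everything, too low and the assistant has quietly become the arbiter.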

References

1. Fergus Walsh, “AI ‘Outperforms’ Doctors Diagnosing Breast Cancer,” BBC News, Jan. 2, 2020, bbc.co.uk/news/health-50857759.

2. Sam Shead, “Researchers: Are We on the Cusp of an ‘AI Winter’?,” BBC News, Jan. 12, 2020, bbc.co.uk/news/technology-51064369.

3. D-ID, deidentification.co.

Au.: Images courtesy Peter Koch, Yxlon International

, is an expert in use and analysis of 2-D and 3-D (CT) x-ray inspection techniques for electronics; dbc@bernard.abel.co.uk.