A History of Placement Programming and Optimization

A new approach to production planning models specific SMT machines and lines by automatically parsing products into groups.

Since the start of SMT production, the optimization of machine programs has been one of the most interesting challenges in software engineering. With the flexibility available from today’s machines, optimization is so complex that even the latest supercomputer would take months to evaluate every possible placement sequence. SMT program optimization must complete in minutes or even seconds to be practical and usable, so a much more intelligent approach is needed. Let’s look under the hood at SMT placement optimization algorithm strategies, building up in layers from the basic “random walk” exercise, through common feeder setups and line balancing, to finally consider where it has all gone wrong and look at a completely fresh approach.

Stage 1: The moving PCB. The earliest SMT placement machines inherited bad habits from their through-hole predecessors. These machines had a bulky insertion mechanism, so the PCB had to move while the insertion mechanism remained static. Insertion machines followed a mechanical cycle that controlled the movement of mechanisms at various times. For programming the insertion sequence, this cycle set the time window during which the PCB, mounted on an x-y table, could move. If the machine cycle time was one second, then probably 0.6 sec. of that was the part of the cycle in which the mechanism was clear of the PCB, permitting it to move. Measuring the acceleration, speed and deceleration that the x-y mechanism could achieve within that 0.6 sec. gave the maximum distance the PCB could move without requiring the machine to pause in its cycle. If every move between insertions stayed within this maximum distance, the machine program sequence could be said to be 100% efficient. The optimization problem with moveable x-y tables was therefore not strictly a “random walk” exercise to find the minimum path between insertions, but one of avoiding moves longer than a set distance. When hundreds of insertions are spread randomly across a large PCB, this can be quite a challenge to do manually. It is easy, though, to write a computerized optimizer that considers every possible path and simply chooses the best. Unfortunately, the number of possible paths grows factorially with the number of insertions: 20 insertions already allow more than 2 × 10^18 different orderings, so a board with a few hundred insertions would keep even the fastest PC busy far longer than is practical.
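The distance limit described above can be sketched with a simple trapezoidal motion profile. Only the 0.6 sec. window comes from the example in the text; the speed and acceleration figures, the function names, and the assumption that the x and y axes move independently (so the longer of the two axis moves decides whether a pause is needed) are illustrative, not the specification of any real machine.

```python
def max_move_distance(window_s, v_max, accel):
    """Farthest one axis can travel and come to rest within window_s seconds.

    Assumes a symmetric trapezoidal profile: accelerate at `accel`,
    cruise at `v_max`, then decelerate at `accel`.
    """
    t_ramp = v_max / accel                  # time needed to reach top speed
    if 2 * t_ramp >= window_s:              # top speed never reached: triangular profile
        return accel * (window_s / 2) ** 2
    cruise_s = window_s - 2 * t_ramp
    # the two ramps cover v_max * t_ramp in total, the cruise covers the rest
    return v_max * t_ramp + v_max * cruise_s


def fraction_without_pause(points, limit):
    """Share of moves in an insertion sequence that fit inside the limit."""
    moves = list(zip(points, points[1:]))
    fits = sum(
        1 for (x0, y0), (x1, y1) in moves
        if max(abs(x1 - x0), abs(y1 - y0)) <= limit   # axes assumed independent
    )
    return fits / len(moves)


# Hypothetical table: 500 mm/s top speed, 5000 mm/s^2 acceleration
limit_mm = max_move_distance(0.6, v_max=500.0, accel=5000.0)
sequence = [(0, 0), (100, 50), (400, 50), (450, 300)]
print(limit_mm, fraction_without_pause(sequence, limit_mm))
```

A sequence scoring 1.0 on the second function is "100% efficient" in the sense used above; anything lower means at least one move forces the machine to wait.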

Instead, algorithms were developed to reduce the number of calculations necessary. One effective method was a form of “clustering,” in which all points that could be joined in a single line without loss were processed first, forming “strings” of 100% efficient sequences. The endpoints of the strings were then joined by trying every possible combination. This normally reduced the calculation time to a few seconds and usually gave a decent solution, but it could not be guaranteed to find the best result. A further process, which smoothed the last three or five positions on either side of each join, reduced the losses incurred between clusters. This is a simple example of a heuristic algorithm of the period. Within a heuristic algorithm the rules are fixed, however, which brought criticism that potentially better solutions lying outside the rules were never considered.
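As a rough illustration of the clustering idea, the sketch below chains points into strings whose every hop fits within the loss-free limit, then tries every order and orientation of the strings to find the cheapest joins. It is a reconstruction under stated assumptions rather than any vendor's algorithm: the names `hop`, `build_strings` and `join_strings` are invented here, each string is grown from one end only, and the smoothing step mentioned in the text is omitted.

```python
from itertools import permutations, product


def hop(a, b):
    """Move size between two points, assuming independent x and y axes."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))


def build_strings(points, limit):
    """Greedily chain points into strings whose every hop is <= limit."""
    remaining = list(points)
    strings = []
    while remaining:
        string = [remaining.pop(0)]
        while True:
            last = string[-1]
            reachable = [p for p in remaining if hop(last, p) <= limit]
            if not reachable:
                break
            nearest = min(reachable, key=lambda p: hop(last, p))
            remaining.remove(nearest)
            string.append(nearest)
        strings.append(string)
    return strings


def join_strings(strings):
    """Try every order and orientation of the strings; keep the cheapest joins.

    Exhaustive, so only practical while the number of strings stays small.
    """
    best, best_cost = None, float("inf")
    for order in permutations(strings):
        for flips in product((False, True), repeat=len(order)):
            oriented = [s[::-1] if f else s for s, f in zip(order, flips)]
            cost = sum(hop(a[-1], b[0]) for a, b in zip(oriented, oriented[1:]))
            if cost < best_cost:
                best, best_cost = oriented, cost
    return [p for s in best for p in s], best_cost


points = [(0, 0), (10, 0), (20, 0), (100, 0), (110, 0)]
strings = build_strings(points, limit=15)
sequence, join_cost = join_strings(strings)
print(len(strings), join_cost)
```

The exhaustive join step is only affordable because clustering has already collapsed hundreds of points into a handful of strings, which is the saving the text describes.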

Another family of optimization algorithms, called genetic algorithms (GAs), was also developed. In this case, all points were initially joined in a random order to make the sequence. A software function would then score the sequence, which in the case of program optimization meant calculating its execution time on the machine as accurately as possible. The sequence was then split in a semi-random way and rejoined in a different way, hence the genetic reference. The new sequence was then scored, and if it was better than the previous one, it replaced it. This process was repeated over and over until no more improvements could be made. The rules governing how the program sequence was split and rejoined differed in every variation of genetic algorithm, and these evolved gradually over many years as they became key topics of academic interest. Even the best GAs cannot promise to find the best solution, but it was thought they would find good solutions quickly and, given enough time, excellent ones. This gave the most impatient person the option to take a reasonable result after only a few seconds or, at the other extreme, to let the optimization run overnight to get a far better result. Although there was a lot of competition between algorithm developers in the early days of SMT, the best heuristic algorithms could usually find more efficient sequences than the early GAs in a shorter time.
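The loop described above can be sketched in a few lines. This version follows the text literally: one sequence, a random split, a rejoin with the middle section reversed, and acceptance only when the score improves. Textbook genetic algorithms usually keep a whole population and combine parents, so treat this as a simplified stand-in. The scoring function, the fixed iteration budget (instead of stopping when no improvement remains) and all names are assumptions.

```python
import random


def sequence_time(seq):
    """Stand-in score: total move size, assuming independent x and y axes.

    A real optimizer would model the machine's actual execution time.
    """
    return sum(
        max(abs(a[0] - b[0]), abs(a[1] - b[1]))
        for a, b in zip(seq, seq[1:])
    )


def improve(points, score=sequence_time, iterations=20000, seed=0):
    """Repeatedly split and rejoin a random sequence, keeping improvements."""
    rng = random.Random(seed)
    best = list(points)
    rng.shuffle(best)                       # random starting sequence
    best_score = score(best)
    for _ in range(iterations):
        # pick two cut positions and rejoin with the middle section reversed
        i, j = sorted(rng.sample(range(len(best) + 1), 2))
        candidate = best[:i] + best[i:j][::-1] + best[j:]
        candidate_score = score(candidate)
        if candidate_score < best_score:    # keep the new sequence only if better
            best, best_score = candidate, candidate_score
    return best, best_score


board = [(x * 10, y * 10) for x in range(5) for y in range(4)]
best_sequence, best_time = improve(board)
print(best_time)
```

Stopping the loop early still returns a valid sequence, which is what allows the choice between a quick result and an overnight run.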

One advantage of a genetic algorithm is that it can be adapted relatively easily to increasingly difficult challenges, simply by changing the function that calculates the program sequence time. Going back to the insertion machine example, it was not just the PCB that was moving. For a jumper wire machine the pitch of the wire could also change, and there was a maximum change of pitch that could be made per cycle without adding waiting time, which needed to be considered in parallel with the x-y table movement. Eyelet machines had two insertion mechanisms working simultaneously, so an offset in x had to be considered for every second placement. There were then axial and radial insertion machines, where parts had to be picked from a moving feeder table, again with a similar maximum efficient movement per cycle. The limitation of the feeder table was soon found to be the most significant constraint on optimization, so heuristic algorithms focused mainly on it, with clustering of feeders as the primary optimization driver above other variables.
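To show why changing only the timing function is enough, here is a minimal sketch of a cycle-time function in which several axes move in parallel and the slowest one decides the wait. The per-axis limits, the one-second cycle and the linear scaling of waiting time are all assumptions made for illustration; a real model would use each axis's motion profile.

```python
def cycle_time(prev, nxt, limits, base_cycle_s=1.0, window_s=0.6):
    """Time for one insertion when several axes move in parallel.

    `limits` maps each axis name to the largest change it can make inside
    the free part of the cycle. The axis needing the most time decides the
    wait. Waiting time is assumed to scale linearly with the overshoot.
    """
    worst = max(abs(nxt[axis] - prev[axis]) / limit for axis, limit in limits.items())
    wait_s = max(0.0, worst - 1.0) * window_s
    return base_cycle_s + wait_s


def program_time(steps, limits):
    """Total time of a sequence of insertion steps."""
    return sum(cycle_time(a, b, limits) for a, b in zip(steps, steps[1:]))


# Hypothetical jumper wire machine: x-y table plus wire pitch
limits = {"x": 250.0, "y": 250.0, "pitch": 10.0}
steps = [
    {"x": 0, "y": 0, "pitch": 5},
    {"x": 100, "y": 0, "pitch": 25},    # pitch change is twice what fits in one cycle
    {"x": 150, "y": 40, "pitch": 25},
]
print(program_time(steps, limits))
```

Supporting a feeder table or a rotation axis is then a matter of adding one more entry to `limits`, while the search loop itself stays unchanged.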

The ultimate pin through-hole machines came in the form of the variable pitch axial machine, in which the x-y table, the feeder table, the pitch and the rotation could all move, and all had to be considered in parallel to make the final optimized machine program. This pattern of heuristic sequence optimization was inherited by the early SMT machines, the so-called turret machines, where the PCB x-y table and feeder table moved in the same way as for through-hole insertion. Some new constraints came with the SMT machines, however. The pickup of materials from the feeders was performed some cycles in advance of the placement because of the number of parts on the rotating turret at any time. Turret machines also had variable speeds of placement and PCB movement. The placement speed of the machine was reduced to that of the slowest part on the turret at the time, meaning that mixing speeds within the sequence was inefficient. The PCB speed was a one-way problem: once the x-y table speed had been reduced for any part, it could not be increased again. Heuristic algorithms became increasingly complex, requiring months of development and years of fine-tuning. GA-based optimization coped more easily, although the time needed to find acceptable solutions increased, which was largely offset by the progressive increase in the speed of PCs.

Stage 2: Moving heads. Turret-based SMT machines were designed to run at high speeds, which for larger SMT materials was quite a challenge because the turret would have to slow to a crawl to prevent heavy parts flying off and to permit time for visual recognition of complex lead patterns. In parallel, pick-and-place SMT machines were developed, where the PCB and the feeder table were static and the placement head moved on an x-y axis. Once again, the optimization algorithm started out simple, only considering the path needed to drive the head to pick up materials and then go to the placement point on the PCB. The main variable was again the positioning of materials on the feeder table. Materials located at the ends of the table required more head travel and hence more time. In heuristic algorithms, priority in deciding material setup positions was based on how many of each material were used on a PCB. The best material position was calculated from the centroid of the placement points. In reality, though, the head could move in x and y simultaneously, meaning that however far the placement was from the feeder table in the perpendicular direction, which was unavoidable, the head could travel that same distance along the feeder table at no extra cost. This defined which paths were executed without needless loss, and so were 100% efficient, and which were not. GAs adapted accordingly using similar timing assessment rules.
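The feeder positioning rule can be sketched as follows: the most-used parts choose first, each takes the free slot nearest the centroid of its placements, and a second function measures the sideways travel that exceeds the "free" allowance given by simultaneous x and y movement. Equal head speed in x and y, a feeder table lying along the line y = 0, and all names are assumptions for illustration.

```python
def assign_feeders(placements, slot_xs):
    """Give each part the free slot nearest the centroid of its placements.

    placements: part name -> list of (x, y) placement points on the PCB.
    slot_xs:    x position of every available feeder slot (at least one per part).
    """
    free = list(slot_xs)
    assignment = {}
    # parts with the most placements get first choice of position
    for part in sorted(placements, key=lambda p: len(placements[p]), reverse=True):
        points = placements[part]
        centroid_x = sum(x for x, _ in points) / len(points)
        slot = min(free, key=lambda sx: abs(sx - centroid_x))
        free.remove(slot)
        assignment[part] = slot
    return assignment


def lateral_loss(slot_x, point, feeder_y=0.0):
    """Sideways travel not hidden behind the unavoidable travel away from the feeders."""
    x, y = point
    return max(0.0, abs(x - slot_x) - abs(y - feeder_y))


placements = {
    "R1": [(10, 50), (30, 60), (20, 40)],
    "C1": [(22, 80)],
}
slots = assign_feeders(placements, slot_xs=[0, 20, 40])
print(slots, lateral_loss(slots["C1"], (22, 80)))
```

A pick-to-place move with zero lateral loss is 100% efficient in the sense used above, which is why a slot some way from the ideal position may still cost nothing.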

In a short time, however, pick-and-place machines became more complex. Some materials needed to go to a camera for recognition, a detour on their journey from feeder to placement. Sometimes suction nozzles on the placement head needed to be changed during the production cycle to place different part sizes and shapes, and these changes also needed to be minimized. Trays of materials were introduced where many parts could be picked up from one tray location, with a stack of trays moving in and out of the pickup position. The lead time needed to change from one tray to another could cause avoidable loss if the time between successive picks was too short.

Although a huge effort was made to make the pick-and-place mechanism as fast as possible, much time was always going to be consumed moving the head to the feeders and then back to the PCB for each mounted component. The initial solution to this issue was to pick and place several parts at once. Various multi-head mechanisms came from different machine vendors, the simplest with heads in parallel. This meant up to four parts, for example, could be picked up simultaneously and then placed one by one. This only worked if the materials were set up on the feeder table in the exact positions that matched the pickup heads, and even then, perhaps not all four parts needed to be mounted for each pick. Nevertheless, this method often worked and was effective at reducing overall placement time, but it brought significant challenges to optimization. Heuristic algorithms had to work out the possibilities for these “gang picks” in advance and accommodate the rest of the logic around them. The GAs only learned of a gang-pick opportunity when a particular sequence suddenly showed a reduced time. It often happened that the specific combination that used a gang pick was not found by the GAs for quite some time. More flexible multi-heads that could rotate in certain ways to pick up multiple parts from any feeder position were then developed, which could be used much more of the time and were much easier for GAs to discover.
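A heuristic optimizer working out gang-pick possibilities in advance might begin with a check like the one below: for a given head position, which heads sit over a feeder holding a part that is still needed? The slot numbering, the four-head default, the head spacing expressed in feeder slots and the function names are all hypothetical.

```python
def gang_pick(slot_of_part, needed, first_slot, heads=4, head_pitch_slots=1):
    """Parts that can be picked in one stroke with head 0 over `first_slot`.

    slot_of_part: part name -> feeder slot number.
    needed:       part name -> placements still to be made.
    """
    part_at = {slot: part for part, slot in slot_of_part.items()}
    picked = []
    for head in range(heads):
        part = part_at.get(first_slot + head * head_pitch_slots)
        if part is not None and needed.get(part, 0) > 0:
            picked.append(part)
    return picked


def best_gang_pick(slot_of_part, needed, heads=4, head_pitch_slots=1):
    """Head position that picks the most parts in a single stroke."""
    slots = list(slot_of_part.values())
    reach = (heads - 1) * head_pitch_slots
    starts = range(min(slots) - reach, max(slots) + 1)
    best_start = max(
        starts,
        key=lambda s: len(gang_pick(slot_of_part, needed, s, heads, head_pitch_slots)),
    )
    return best_start, gang_pick(slot_of_part, needed, best_start, heads, head_pitch_slots)


feeders = {"A": 1, "B": 2, "C": 3, "D": 7}
still_needed = {"A": 2, "B": 1, "C": 0, "D": 5}
print(best_gang_pick(feeders, still_needed))
```

In this example part D sits too far from the others to share a stroke, which is the kind of setup decision that determines whether gang picks are available at all.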

The next challenge for optimization came with machines that had two placement beams operating in parallel on the same x-y table at the same time. In effect, two parallel placement sequences needed to be made, so that as well as being efficient each sequence would have to avoid cases where beams would have to wait for each other as they crisscrossed the PCB. Allocation of materials between the two sides of the machines corresponding to the two heads was critical to ensure the time for each side would be balanced. This meant several iterations of sequencing were needed by the heuristic algorithms as different material allocations were tried.

For GAs, the machines are still considered as a whole, although with the increased complexity, the time needed to find the combinations that work well increases. More recently, optimization for pick and place has grown even more challenging, to support machines with multiple stages of positions where PCBs can stop and multiple sets of pick-and-place robots, such as in modular machine designs. For modular machines, the time loss of the travel of the heads is almost negligible because materials are positioned within a relatively narrow module. However, the allocation of materials between the different modules is critical because the balance of the work done by each module needs to be the same to keep all modules of the machine working at all times.

Stage 3: Moving materials. The problem of material allocation across modules in a machine is similar to the allocation of materials between independent machines connected in a line. In the modular case, all modules belong to the same platform and can be optimized within the same algorithm. When different machines are used, especially if they come from different platforms or vendors, each machine has to be optimized separately. Many iterations are then needed to try different allocations of materials between the machines to balance the workload, and the whole optimization of each machine sequence has to be repeated for every iteration. This can be a major area of compromise with mixed-vendor lines when using the machine vendor’s own software for program optimization, because the material iterations need to be done manually and painstakingly; thus, probably only one or two iteration cycles are practical. A third-party software optimization provider within an overall SMT process preparation package will be able to do these iterations automatically, bringing an effective and automated optimization solution.

Whether the machines come from the same vendor or from different vendors, the optimization of each machine has to be repeated many times. Because the line is only as fast as its slowest machine, poor line balance, even where the workload assigned to each machine differs by only a couple of percentage points, can result in serious throughput reduction. This is quite visible with connected independent machines, but it is just as important inside modular machines, where the idle time of individual heads and modules is a lot more difficult to see. Line balancing becomes exceptionally difficult to model where machines provide two or more lanes, in which different PCBs can be produced together on the same machine at the same time. The required placements are then built up as the combination of placements on each PCB, even though any combination of PCBs may be physically in place at the time, meaning only a part of the full sequence may be executed. Because of this variation in execution, there is also variation in the balance of materials used and in line balance loss.
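The cost of imbalance is easy to quantify. The sketch below allocates parts to machines with a simple greedy rule (largest workload first, onto the least-loaded machine) and then measures how much capacity sits idle while the bottleneck machine finishes. It assumes each part's placement time is the same on every machine, which real lines rarely satisfy, and the greedy rule is a common textbook shortcut rather than the method of any particular tool.

```python
def allocate(part_times, machines):
    """Greedy allocation: largest workloads first, each to the least-loaded machine.

    part_times: part name -> total placement time for that part on one board.
    """
    loads = [0.0] * machines
    allocation = [[] for _ in range(machines)]
    for part, seconds in sorted(part_times.items(), key=lambda kv: kv[1], reverse=True):
        target = loads.index(min(loads))
        loads[target] += seconds
        allocation[target].append(part)
    return allocation, loads


def line_cycle_time(loads):
    """The line can only run as fast as its slowest machine."""
    return max(loads)


def balance_loss(loads):
    """Fraction of total machine capacity left idle waiting for the bottleneck."""
    return 1 - sum(loads) / (len(loads) * max(loads))


part_times = {"A": 8, "B": 7, "C": 6, "D": 5, "E": 4}
allocation, loads = allocate(part_times, machines=2)
print(allocation, line_cycle_time(loads), round(balance_loss(loads), 3))
```

Here a better split exists (A and B on one machine, the rest on the other), which the greedy rule misses. That gap is why real optimizers iterate over many allocations instead of accepting the first.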

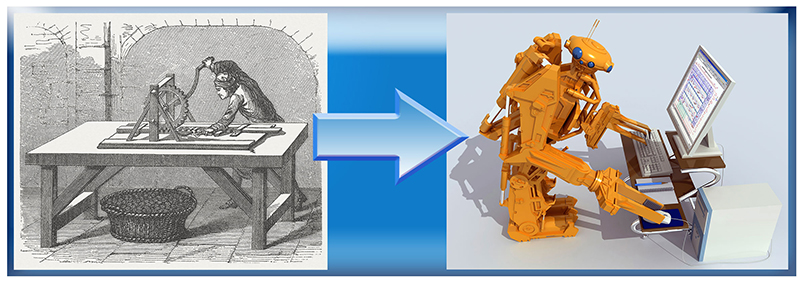

Figure 1. Placement programming and optimization have been evolving, but the journey is not over yet.

Stage 4: Changing products. For high-volume production, optimized machine programs once ran for hours, days, or even weeks without change, and so program optimization was crucial. In almost every case today, the number of different products made in any SMT factory exceeds the number of production lines available. While SMT machines have become faster, lot sizes of each product have become smaller. This has meant in many cases that the time taken to change material setups on machines between products incurs vastly more overall loss time than time loss in an optimized SMT machine program. The concept of creating common setups of materials reduced or even eliminated the changeover time. The programming engineers would group together a set of normally related products that used similar materials. The position of materials setup on the machines would then be fixed across the group of products so that any product could be made against the setup with few or no material changes. In practice, often more different materials were required for the group than space available on the machines. In such cases, most materials could be common, but some would have to be changed between products. These changes would often be restricted to one area of one machine in the line, preferably where a single trolley of materials could be easily swapped out between products. Although this strategy is effective at reducing or even eliminating changeover times, other losses are created as a result.
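A first-cut version of this grouping can be sketched as a greedy pass: each product joins the first group whose combined set of materials still fits the feeder positions on the line, and otherwise starts a new group. Strict capacity (no partial changeovers inside a group), the part lists and the capacity of four positions are simplifying assumptions for illustration.

```python
def group_products(product_parts, capacity):
    """Greedily group products so each group's materials fit one fixed setup.

    product_parts: product name -> set of part numbers it uses.
    capacity:      feeder positions available on the line.
    A product needing more parts than `capacity` still gets a group of its own.
    """
    groups = []
    # place the products with the most materials first
    ordered = sorted(product_parts.items(), key=lambda kv: len(kv[1]), reverse=True)
    for name, parts in ordered:
        parts = set(parts)
        for group in groups:
            if len(group["parts"] | parts) <= capacity:
                group["products"].append(name)
                group["parts"] |= parts
                break
        else:
            groups.append({"products": [name], "parts": parts})
    return groups


products = {
    "P1": {"a", "b", "c"},
    "P2": {"b", "c", "d"},
    "P3": {"x", "y", "z"},
    "P4": {"a", "d"},
}
for group in group_products(products, capacity=4):
    print(group["products"], sorted(group["parts"]))
```

Any product in a group can then run against that group's setup with no material change, and a changeover is needed only when moving between groups.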

As we have seen, the ability of SMT program optimizers to avoid loss is predominantly based on the position of materials on the machine. With common setups of materials, the opportunity to tailor this for each product is removed. The initial choice of the material setup positioning is crucial to minimize the losses that will be inevitable across all products in the group. This is often done by basing the fixed setup on the highest-running product in the group or, in more advanced cases, by creating a virtual product whose superset of placements represents each product in the group, weighted according to the expected quantities of each product. Inevitably there is compromise, commonly with an overall reduction of program efficiency of as much as 25%. This situation becomes worse over time as products within the group become obsolete and new ones are added, with sometimes 50% or more of efficiency lost. This can still be acceptable if lot sizes are very small. The issue remains that, either way, machines are producing a lot less than they are capable of, and further machine program optimization is unable to make any significant difference.
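One way to picture the virtual product is as a weighted placement count: each product's placements per board are multiplied by the number of boards expected, and the totals decide which materials earn the best feeder positions. The product names, counts and quantities below are invented for illustration.

```python
from collections import Counter


def virtual_product(placements_per_board, expected_boards):
    """Weighted placement count per part across every product in a group.

    placements_per_board: product -> {part: placements on one board}.
    expected_boards:      product -> number of boards expected to be built.
    """
    weighted = Counter()
    for product, parts in placements_per_board.items():
        for part, count in parts.items():
            weighted[part] += count * expected_boards.get(product, 0)
    return weighted


placements_per_board = {
    "P1": {"R1": 10, "C1": 2},
    "P2": {"R1": 1, "C1": 20},
}
expected_boards = {"P1": 1000, "P2": 100}

weights = virtual_product(placements_per_board, expected_boards)
# parts listed from most to least used decide feeder position priority
print(weights.most_common())
```

When the expected quantities shift, the weights shift with them, which is one reason a fixed setup drifts away from its best arrangement over time.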

Stage 5: Changing requirements. With so much work involved in the creation of SMT machine programs with common setups, the likelihood is they will remain in use for some time. However, as the actual delivery requirement for the factory changes over time, the balance of demand can shift between the prepared groups of products, leaving some lines unable to achieve target with one group, while others are standing idle with theirs. Whatever assumptions SMT programmers made about the grouping of products, the effectiveness of those decisions can rapidly degenerate, especially in the current climate of increasing flexibility in demand to the factory as stock is reduced in the distribution chain. There is no “win” situation; thus a fundamental flaw in the approach to SMT optimization is exposed.

Stage 6: A new approach. A new approach to SMT optimization is needed that effectively turns the whole process upside down. Starting with the near-term need of the customer, the creation and sequencing of work-orders should be done first, permitting any capable line to make any product. This ensures both that the customer need will be met and that the mix of products will be divided across all available lines, keeping them busy. This schedule optimization cannot be done in isolation; we know the creation of product groups and assignment of common material setups is essential to ensure minimum loss at the inevitable product changeovers. The solution is to use a finite production plan tool that can provide the work-order sequence optimization and can model specific SMT machines and lines with the automated selection of products into groups. Once the schedule and product grouping have been done in this way, the SMT process preparation tool or machine vendor-specific optimization tool can take the group setups and perform the specific machine optimization processes.

Optimization done in this order transforms the way in which constraints are treated and affects the optimization process. The calculations and decisions made for grouping products are done without the need for underlying optimization of machine programs, with modeling that is precise enough to follow the exact machine specification and capability. As a result, the production plan tool can optimize several orders of magnitude faster than machine-program-based grouping tools, permitting it also to consider a wide scope of grouping optimization; for example, thousands of products and work-orders over all production lines in the coming month or whatever period is needed. The SMT machine program optimization would also be faster because the feeder setups have already been decided. The final optimization is down to the machine level, which can be completed in just a couple of minutes. Put together, the production plan tool can be run much more frequently than any other scheduling process before it, and changes in schedules would be easy to execute from the product portability standpoint. Losses in the machine sequencing following the grouping made by the production plan optimization are usually no worse than those made by the machine optimization tool, and the line dormancy and performance degradation issues, which were the major cause of lost productivity, are completely removed.

Stage 7: Enjoy the benefits. The combination of production plan optimization and process preparation or machine vendor SMT optimization tools, working together in the right sequence, ensures the factory is always making the products needed against the delivery plan. It also ensures product grouping is effective and can be changed effortlessly in line with changing delivery needs. SMT program optimization once again becomes important because the machines are now working in a way that approaches high volume, where every second of saved program loss time and every percentage point of productivity matter. A new balance of performance and flexibility is achieved, with tools now providing the maximum effectiveness for which they were designed.

SMT machine placement programming and optimization have come a long way over the life of SMT production, but what’s needed now is a reversal in the process flow of production planning, which had been compromised by grouping decisions that were made by the machine program optimization software and did not reflect production needs. Now, SMT programs can be optimized in line with production plans that are themselves fully optimized by production plan software designed specifically for the SMT environment.

Michael Ford is senior marketing development manager, Mentor Graphics (mentor.com); michael_ford@mentor.com.