Designing a Robust Industrial Augmented Reality Solution

A review of tracking methodology choices to address challenges of environmental factors such as light and the prerequisite of fixed visual features.

The future of manufacturing will include elements of augmented reality (AR). As the Pokémon GO and Ikea Place apps continue to drive awareness of AR, technology companies continue to develop AR solutions to key productivity, quality and efficiency challenges. Manufacturers are looking for innovative ways to solve problems, and AR may be the key. According to an article by Cognizant, innovative companies such as Ikea, Mitsubishi, Toyota and Lego, along with 10% of Fortune 500 companies, have begun exploring augmented reality applications.1 In addition, Gartner predicts that by 2020, 20% of large enterprises will evaluate and adopt augmented reality, virtual reality and mixed reality solutions as part of their digital transformation strategy.2,3

Augmented reality has been around for some time. However, the convergence of visual processing power, data processing capabilities and compute power has only recently made mixed reality solutions viable.

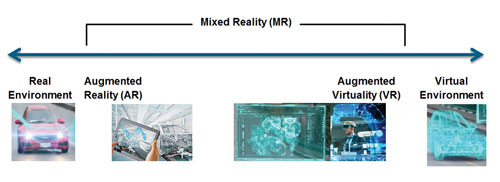

The reality-virtuality continuum. The notion of a reality-virtuality continuum was introduced by Paul Milgram, a professor of engineering at the University of Toronto, more than two decades ago. In a paper published in 1994,4 Milgram describes the mixed reality environment as the space between the real environment and the virtual environment, as shown in FIGURE 1.

Figure 1. The reality-virtuality (RV) continuum.

In the manufacturing context, the far right of the spectrum describes what is often referred to as the digital twin of a factory, product and production, whereas the far left describes the actual factory floor with workers operating machines based on information on screens or other instruments.

Several use cases show the high impact augmented reality has on manufacturing. These use cases include assembly instructions and validation of complex assembly, asset maintenance instructions, expert remote instructions and support, programming and commissioning validation, and real-time quality processing and visualization.

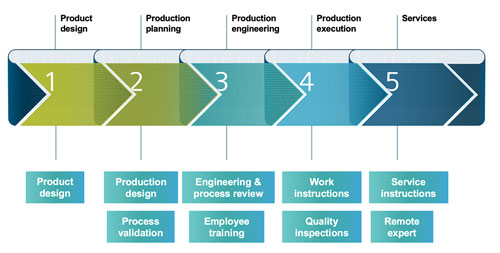

Most use cases in manufacturing today seem to focus on integrating AR at the actual production line, where it is most feasible to link actual machinery, robots, parts and 3-D graphic models. These use cases include helping a shop-floor worker identify the next part in the assembly sequence (FIGURE 2), validating a robotic program using an AR-generated part instead of an actual part, and overlaying the 3-D model on top of the actual part for quality inspection (an as-planned vs. as-built scenario). When reviewing use cases throughout the product lifecycle (FIGURE 3), it is clear that the use cases most relevant to AR technology are those further down the cycle, where the digital twin (the virtual model of the product) can interact with the real production line and related products.

Figure 2. Demo on an airplane nose (One Aviation) from the Hannover Messe trade show in 2018.

Figure 3. Siemens’ holistic product design-through-manufacturing value chain.

Model-based work instructions, which leverage the design and assembly process data within managed data structures, could clarify an assembly process for the shop-floor worker by overlaying the 3-D design model on top of the current physical assembly, thus shortening process cycle time and reducing human error. Such an AR model-based work instruction solution has another significant benefit: it inherits the automation that comes with model-based work instructions, because the CAD model and the process sequence are byproducts of work done in earlier stages of the product lifecycle and can be reused. Such a solution should eliminate the need to manually create and update documents as part of the work instruction authoring process. It also speeds up onboarding, as it makes the work instructions more intuitive.

Another relevant use case addresses production assembly planning validation: an AR part is precisely placed on a fixture at the real production line to validate a welding program written directly to the robot controller. This saves the time and money of manufacturing a real prototype, which, in addition to its cost and manufacturing time, might not comply with common standards.

Training shop-floor workers on assembly procedures is an AR use case that could accelerate new employees’ learning curves and save valuable time for experienced employees who currently must mentor them. For quality issues and validation, AR can be used to validate part manufacturing and assembly structures in an as-planned vs. as-built scenario, where an employee compares the physical product with the digital twin overlaid on it.

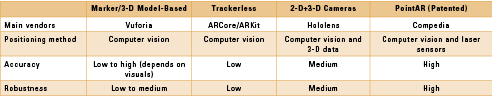

To address the use cases described above, a scalable, robust solution must be designed that includes accurate and reliable tracking. Tracking is how the augmented reality algorithm recognizes real-world objects so that it can accurately place virtual objects onto them. The most common commercial solutions that perform tracking today, such as Google’s ARCore, Apple’s ARKit and Vuforia’s eponymous system, use vision algorithms that inspect visual features in the incoming image stream of the actual object to determine the exact position at which to place the 3-D model. These solutions allow flexibility and simplicity during setup but are sensitive to environmental factors such as light variance and line of sight, and they require clear, highly detailed features on the scanned object to achieve high accuracy.
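To make the role of tracking concrete: once the camera pose (rotation R and translation t) is known, each vertex of the 3-D model can be projected into the camera image. The minimal sketch below assumes an ideal pinhole camera without lens distortion; the function name and the intrinsic parameters fx, fy, cx and cy are illustrative assumptions, not values from any of the products named above.

```python
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def project_point(point_world, rotation, translation, fx, fy, cx, cy):
    """Project a 3-D model point (world frame, mm) to pixel coordinates.

    Assumes an ideal pinhole camera with no lens distortion.
    rotation: 3x3 matrix (row-major) mapping world to camera frame.
    translation: camera-frame translation in mm.
    """
    # Transform into the camera frame: p_c = R * p_w + t
    xc, yc, zc = (
        sum(rotation[i][j] * point_world[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if zc <= 0:
        raise ValueError("point lies behind the camera")
    # Perspective division, then apply the (hypothetical) intrinsics
    return fx * xc / zc + cx, fy * yc / zc + cy


# A point 10 mm off-axis, 2 m in front of the camera
u, v = project_point((10.0, 0.0, 0.0), IDENTITY, (0.0, 0.0, 2000.0),
                     fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
print(u, v)  # 965.0 540.0
```

Any error in the estimated pose shifts these projected pixels, which is why tracking accuracy directly bounds overlay accuracy.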

This article describes the design and implementation of a robust industrial augmented reality assembly instruction and validation solution, reviewing various choices of AR and tracking methodology to address the challenges of environmental factors and the prerequisite for visual features in the environment. In addition, the solution “validates” that a manual operation was performed, which is key to ensuring a robust solution. Simply providing instructions is not enough for critical manufacturing needs. This solution is being tested in an actual production line for gas-insulated switchgear at the Siemens Gas and Power factory in Berlin, Germany.

Technology Overview

Key technologies. Current AR solutions are based on two main factors: the type of camera used and the analysis of visual features within the camera feed. The main requirement of such AR solutions is an environment with sufficient distinguishing visual features, defined as “trackers.” These visual features are identified by computer vision-based algorithms that calculate the camera’s location relative to the physical environment, enabling virtual objects to be overlaid on the camera feed of the target physical object to generate the AR effect.

Table 1. AR Software Technology Comparison

Because clear visual features are not always available in the physical environment, visual stickers, also known as “markers,” can be used to enrich the environment for optimal detection, provided they are placed accurately on site next to the target object to be tracked.

Other popular solutions in the AR market today include embedded 3-D cameras that measure the depth between the camera and the target object (as integrated, for instance, in Microsoft’s HoloLens head-mounted wearable), improving the tracking mechanism by adding a 3-D mapping layer. Another approach to accurate AR uses an array of accurately calibrated cameras that track physical objects by means of attached visual trackers (usually IR-reflective spheres); computer vision algorithms process the images from the camera array in real time to locate these objects.

An important distinction among AR implementations is the user interface, as current AR developments fall into two major categories: wearable head-mounted devices and mobile devices. Some major OEMs (Microsoft, Magic Leap) are developing and customizing hardware devices that aim to make the user’s mixed-reality experience seamless. Others (Google, Apple) concentrate on their own software development kits for developers creating AR applications, with an emphasis on the experience on mobile devices.

Challenges. One of the key challenges in developing an augmented reality solution for industrial applications is that most industrial environments (e.g., the fuselage of an airplane) lack clear visual features on all surfaces, limiting the use of AR to regions where visual features are clear and visible. Further, such solutions require good lighting conditions in industrial environments, and they are often sensitive to variations in scale, color, etc., between the actual part(s) and their 3-D representation.

Using visual stickers as markers to enhance the visual features in an industrial environment requires excessive preparation work, is prone to user error and inaccuracy, and is only a partial solution, as full coverage is not always possible with this technique. Moreover, one of the key benefits of an integrated solution is overcoming data revision issues, and visual stickers do not solve that problem.

Use of 3-D cameras to attempt to map an area and provide a better feed to an AR solution is also a partial solution, as there are use cases where the target environment is covered with uniform surfaces where it’s impossible to differentiate between surfaces based on depth information.

Using an array of cameras is also limited, as it is expensive, requires complicated calibration between devices, adds substantial infrastructure and hardware to the setup, and can create security and layout issues.

The reasons above currently prevent commercial AR solutions based on visual features, markers and high-end cameras from being rapidly adopted by manufacturers around the world.

For industrial shop-floor use cases, it is also important to identify the most suitable hardware devices. Wearable devices that give the user an intuitive, hands-free experience have their own limitations. The most significant is the reluctance of shop-floor workers to wear these devices on their heads for the long periods that production line use cases require. Wearables also tend to limit the field of view, and related orientation issues identified in many tests raise additional health concerns.

There are many challenges to existing AR solutions, and a new approach is needed.

Solution Design

Analysis and technology decisions. After an in-depth feasibility study focusing on specific production and shop-floor use cases and on AR implementations in manufacturing environments, we concluded that the limitations of existing AR solutions described earlier called for a new technology.

All those efforts eventually led to the development of a new technology we call “PointAR.” We identified a new generation of low-cost, low-energy laser lighthouses and related sensors developed in the virtual reality (VR) industry. However, we found no complementary off-the-shelf software. The reason is that tracking for VR headsets does not require absolute accuracy but rather consistency and continuity. (In other words, an inaccurate absolute position is acceptable, provided the changes in position are consistent.) By contrast, a robust AR solution for manufacturing requires absolute accuracy, because the virtual content must line up with real objects in the physical environment. Further calculations and experiments demonstrated that fusing computer vision algorithms with absolute-accuracy-oriented algorithms makes it possible to use the same VR Lighthouse hardware while achieving good results at 7m from the lighthouse and 4m from the target object. This new technology enables an AR solution based on computer vision algorithms fused with laser lighthouses and inertial sensors, a combination that is reliable and inexpensive.
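The distinction between consistency and absolute accuracy can be illustrated numerically. The sketch below assumes a hypothetical VR-style tracker whose readings carry a constant 15mm offset: frame-to-frame motion is reproduced perfectly, which is all a VR headset needs, yet every absolute position is 15mm off, which would visibly misplace an AR overlay on a real part. The positions and the offset are invented for illustration.

```python
import math


def tracking_errors(truth, measured):
    """Return (relative, absolute) errors in mm for matched 3-D positions.

    relative: error in each frame-to-frame displacement (what VR needs)
    absolute: distance of each reading from ground truth (what AR needs)
    """
    relative = [
        abs(math.dist(measured[i], measured[i + 1]) - math.dist(truth[i], truth[i + 1]))
        for i in range(len(truth) - 1)
    ]
    absolute = [math.dist(m, t) for m, t in zip(measured, truth)]
    return relative, absolute


# Ground-truth positions (mm) of a tracked unit at three instants
truth = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (100.0, 50.0, 0.0)]
# Hypothetical tracker with a constant 15 mm bias along x
bias = (15.0, 0.0, 0.0)
measured = [tuple(p + b for p, b in zip(pt, bias)) for pt in truth]

relative, absolute = tracking_errors(truth, measured)
print(relative)  # [0.0, 0.0]
print(absolute)  # [15.0, 15.0, 15.0]
```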

Solution architecture. As a prerequisite for a robust AR solution for manufacturing, the data source must consist of precise CAD data, 3-D models and metadata that describe additional information about the given process. An enterprise data management solution that manages electronic CAD (ECAD), mechanical CAD (MCAD), process information and metadata describing process dependencies is recommended. Many manufacturers and technology companies achieve this through a strong connection to their product lifecycle management (PLM) system. The PLM system may act as a tool to create, store, author and configure the data that are later loaded into the AR solution.

The 3-D models are created with CAD software and dedicated CAD libraries and stored in the PLM database during the early product design and production planning phases. Additional metadata related to the production process are added during the production planning and engineering phases, after which dedicated plugins export the data from the PLM to the AR solution. The PLM software acts as a configuration management system that ensures the AR visualization software always reflects the latest datasets.
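The article does not specify the format of the data package exported from the PLM. As a hypothetical illustration only, the sketch below assumes a JSON export containing a product ID, a revision and a list of assembly steps, and shows how the AR application could refuse a package whose revision does not match the released revision, the kind of configuration-management check the PLM connection is meant to guarantee. All field names and values are invented.

```python
import json
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class AssemblyStep:
    sequence: int
    part_id: str
    model_file: str   # hypothetical path to the exported 3-D model
    instruction: str


@dataclass(frozen=True)
class WorkInstructionPackage:
    product_id: str
    revision: str
    steps: Tuple[AssemblyStep, ...]


def load_package(text: str, released_revision: str) -> WorkInstructionPackage:
    """Parse a (hypothetical) PLM export and reject stale revisions."""
    data = json.loads(text)
    if data["revision"] != released_revision:
        raise ValueError(
            f"package revision {data['revision']!r} does not match "
            f"released revision {released_revision!r}"
        )
    # Order steps by their assembly sequence, regardless of export order
    steps = tuple(sorted((AssemblyStep(**s) for s in data["steps"]),
                         key=lambda s: s.sequence))
    return WorkInstructionPackage(data["product_id"], data["revision"], steps)


export = json.dumps({
    "product_id": "GIS-DEMO",
    "revision": "C",
    "steps": [
        {"sequence": 2, "part_id": "P-200", "model_file": "p200.glb",
         "instruction": "Mount flange"},
        {"sequence": 1, "part_id": "P-100", "model_file": "p100.glb",
         "instruction": "Place housing on fixture"},
    ],
})
package = load_package(export, released_revision="C")
print([s.part_id for s in package.steps])  # ['P-100', 'P-200']
```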

When designing an AR solution for manufacturing, consider the following components:

• A hardware component that reports the exact location of the camera (mandatory) and optionally other components in the environment;

• An interface mechanism that translates and transmits the hardware output to the software layer;

• A software layer that uses the location information to apply the AR layer on top of the camera feed, reads and parses the data package exported from the PLM, and loads the application layer with the corresponding user interface.
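One way to keep these three components decoupled, sketched here under our own assumptions rather than as the PointAR design, is to define the interface mechanism as a small protocol: any hardware adapter that reports the camera pose in a common unit and convention can be plugged into the software layer. The adapter below assumes a hypothetical device that reports position in metres; all class names are illustrative.

```python
from dataclasses import dataclass
from typing import Callable, Protocol, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


@dataclass(frozen=True)
class Pose:
    position_mm: Vec3
    orientation: Quat


class PoseSource(Protocol):
    """Interface mechanism: anything that reports the camera pose."""

    def camera_pose(self) -> Pose: ...


class MetreTrackerAdapter:
    """Hypothetical adapter for hardware that reports metres."""

    def __init__(self, read_raw: Callable[[], Tuple[Vec3, Quat]]):
        self._read_raw = read_raw  # vendor-specific read function

    def camera_pose(self) -> Pose:
        (x, y, z), q = self._read_raw()
        # Translate the hardware output into the common convention (mm)
        return Pose((x * 1000.0, y * 1000.0, z * 1000.0), q)


class ARLayer:
    """Software layer: depends only on PoseSource, not on the hardware."""

    def __init__(self, source: PoseSource):
        self._source = source

    def current_pose(self) -> Pose:
        return self._source.camera_pose()


layer = ARLayer(MetreTrackerAdapter(lambda: ((0.5, 1.25, 2.0), (1.0, 0.0, 0.0, 0.0))))
print(layer.current_pose().position_mm)  # (500.0, 1250.0, 2000.0)
```

With this split, moving to different tracking hardware means writing a new adapter while the AR layer stays unchanged.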

The AR system described should be agnostic to the hardware component used to retrieve the location of the camera and other components in the environment. This gives manufacturers the flexibility to acquire the hardware that is most relevant, cost-efficient and best performing for their manufacturing environment. Tracking is performed by connecting a tracker to the camera via a dedicated mounting device so that the camera and tracker move together as one rigid unit (FIGURE 4). Additional trackers can be attached to other physical objects in the environment to track their movement; for instance, a tracker can be placed on a fixture in the assembly station to track its movement and maintain the AR experience.
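Because the tracker is rigidly mounted on the camera, the camera pose can be obtained by chaining homogeneous transforms: the tracker pose reported by the hardware, followed by the fixed tracker-to-camera offset found during calibration. A tracker on the fixture works the same way, so the 3-D model stays locked to the fixture when either object moves. The plain-Python sketch below is an assumption-based illustration; the frame naming (T_a_b maps coordinates in frame b into frame a) and the example offsets are hypothetical.

```python
def matmul(a, b):
    """Multiply two 4x4 matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_inverse(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(rt[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [rt[i] + [p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def translation(x, y, z):
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def model_in_camera(T_world_tracker, T_tracker_camera, T_world_fixture, T_fixture_model):
    """Pose of the 3-D model in the camera frame (what the renderer needs)."""
    T_world_camera = matmul(T_world_tracker, T_tracker_camera)
    T_world_model = matmul(T_world_fixture, T_fixture_model)
    return matmul(rigid_inverse(T_world_camera), T_world_model)


T = model_in_camera(
    T_world_tracker=translation(1000.0, 0.0, 0.0),     # reported by hardware
    T_tracker_camera=translation(0.0, -50.0, 20.0),    # calibrated mount offset
    T_world_fixture=translation(1000.0, 0.0, 2000.0),  # fixture tracker
    T_fixture_model=translation(0.0, 0.0, 0.0),        # from initial positioning
)
print([row[3] for row in T[:3]])  # [0.0, 50.0, 1980.0]
```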

Figure 4. A PointAR unit equipped with IR-based sensors for tracking and a standard Logitech 4K webcam.

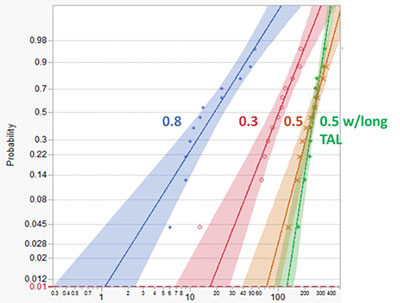

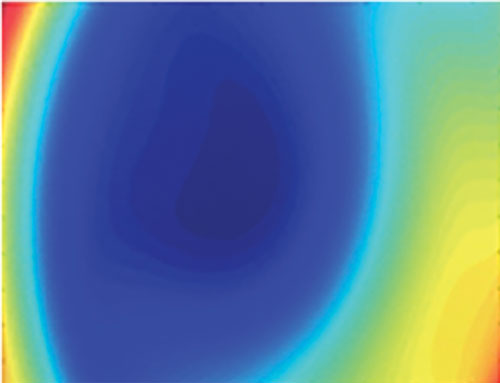

Figure 5. AR accuracy mapping. The image represents the system accuracy at different positions in the camera feed. Blue indicates accuracy better than 1mm (at a distance of 2m from the tracked object). Green and bright yellow indicate accuracies of 1 to 2mm (at 2m).

A unique calibration process is performed for each PointAR unit to precisely determine the offsets between the tracker, the camera and the unit’s tip. This calibration is essential for accurately overlaying the 3-D model on top of the actual part as part of the initial positioning method. On initial launch of the AR application, a simple positioning process is performed: predefined points selected on the 3-D model are matched with points recorded by the user, who physically identifies these points on the actual object with the PointAR unit’s tip. This process aligns the coordinate system of the real world with the coordinate system of the 3-D model and permits free movement of the camera, along with other tracked objects in the environment, without degrading the AR tracking experience.
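The article does not name the algorithm behind the positioning step. A standard technique for aligning matched point pairs is the least-squares rigid fit (the Kabsch, or SVD-based, method), sketched below with NumPy as an assumption rather than as the PointAR implementation. It takes at least three non-collinear model points and the corresponding tip positions recorded by the user, and returns the rotation R and translation t that map model coordinates into tracker coordinates, plus per-point residuals.

```python
import numpy as np


def fit_rigid_transform(model_pts, measured_pts):
    """Least-squares R, t such that measured ~= R @ model + t (Kabsch/SVD)."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(measured_pts, dtype=float)
    if P.ndim != 2 or P.shape != Q.shape or P.shape[1] != 3 or P.shape[0] < 3:
        raise ValueError("need at least three matched 3-D point pairs")
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets
    H = (P - p_mean).T @ (Q - q_mean)
    U, _, Vt = np.linalg.svd(H)
    # Correct for a possible reflection so R is a proper rotation
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    residuals = np.linalg.norm(P @ R.T + t - Q, axis=1)
    return R, t, residuals


# Hypothetical model points (mm) and simulated, noise-free tip recordings
model = np.array([[0, 0, 0], [200, 0, 0], [0, 150, 0], [0, 0, 100]], dtype=float)
angle = np.radians(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([100.0, -20.0, 5.0])
measured = model @ R_true.T + t_true

R, t, residuals = fit_rigid_transform(model, measured)
print(residuals.max())
```

With real tip recordings the residuals are not zero; a point whose residual is much larger than the others is a likely candidate for re-recording.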

Figure 6. Precise AR image placement (CAD model emphasized in yellow) on complex assembly procedures.

Test methodology. The main challenge in developing and hardening the AR solution was implementing accurate AR in real time with low latency. To meet it, we developed proprietary GPU-based algorithms that achieve the required accuracy and performance.

Accuracy was tested by providing a zoom function and letting the user “move” the 3-D overlay image on the projection plane until it was fully aligned. The real-world physical length of this “correction” movement can then be calculated and used to define the AR accuracy. In many use cases, we achieved 1 to 2mm of AR accuracy in real time using low-priced sensors and a standard PC.
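The conversion from an on-screen correction to a physical length is not spelled out in the article. Under a pinhole camera assumption, a shift of Δu, Δv pixels at object distance Z corresponds to roughly Δu·Z/fx and Δv·Z/fy in the object plane, where fx and fy are focal lengths in pixels. The helper below applies that approximation; the function name and the focal length in the example are hypothetical.

```python
import math


def correction_to_mm(du_px, dv_px, depth_mm, fx_px, fy_px):
    """Approximate physical length (mm) of an image-plane correction.

    Pinhole approximation: a pixel shift at depth Z maps to shift * Z / f
    in the object plane. The shift must be measured in unzoomed image
    pixels; lens distortion is ignored.
    """
    dx_mm = du_px * depth_mm / fx_px
    dy_mm = dv_px * depth_mm / fy_px
    return math.hypot(dx_mm, dy_mm)


# User nudged the overlay by (3, 4) px; object at 2 m; hypothetical f = 2500 px
print(round(correction_to_mm(3, 4, 2000.0, 2500.0, 2500.0), 6))  # 4.0
```

With these example numbers one pixel corresponds to 0.8mm, so a 1 to 2mm accuracy target amounts to roughly 1.25 to 2.5 pixels of misalignment.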

Results

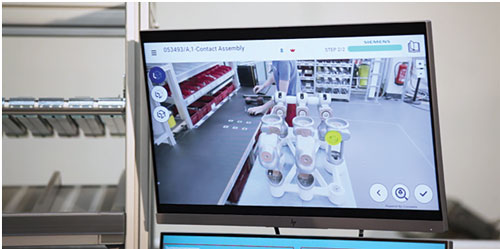

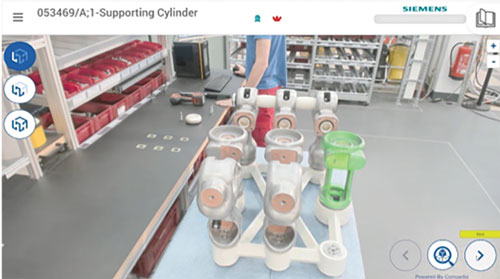

A proof-of-concept pilot of the Siemens PointAR solution has been launched at the Siemens Gas and Power division. The project consisted of equipping three manual assembly stations with a full hardware and software solution for work instructions on the shop floor (FIGURE 7). The main motivation came from this newly built manufacturing line’s need to ramp up production quickly and to accelerate new employees’ learning curve on the precise assembly process, without relying on experienced employees for supervision and guidance.

Figure 7. An AR-enhanced work instruction solution for an assembly station at the Siemens Gas and Power factory, gas-insulated switchgear manufacturing line in Berlin.

As the AR solution is agnostic to the specific sensors, we are now evaluating different sensors for different use cases and requirements. We are also actively seeking new use cases and identify new ones almost every week.

Future software updates are planned to include a more tailored user experience, support for suitable wearable devices as they evolve, connectivity with IoT devices on the shop floor such as Atlas Copco’s smart tools, and improved tracking-accuracy algorithms.

From a business perspective, now that the AR-enhanced work instructions solution is officially part of the Siemens Industry Software portfolio (FIGURE 8), the priority is to validate other AR use cases and leverage the infrastructure we developed to provide accurate AR across various shop-floor scenarios with good ROI.

Figure 8. The AssistAR AR-enhanced work instructions solution, shown at Realize Live in June 2019 in Detroit.

Summary

This article described AR use cases for manufacturing, the challenges with current popular technologies, and how we developed a new approach to implement a robust industrial augmented reality assembly instruction and validation solution. We reviewed various choices of tracking methodology and developed a unique hybrid computer vision and sensor-based solution to achieve an accurate image overlay. This new AR technology for manufacturing, PointAR, yielded a robust solution with 0.5 to 2mm absolute position accuracy and 0.05° to 0.2° angular accuracy (depending on sensors, setting and range). The solution also overcame the challenges of environmental factors and the prerequisite for visual features on scanned objects, and it is not affected by lighting or background. In addition, the solution is accurate enough to “validate” that a manual operation was performed, which is key to ensuring a robust solution. Simply providing instructions is not enough for critical manufacturing needs. We completed successful initial tests, and the AR solution continues to be tested in actual production lines, including at the Siemens Gas and Power factory in Berlin, with others to follow.

References

1. Cognizant, “Building the Business Case for Augmented Reality,” Jun. 19, 2018, cognizant.com/perspectives/building-the-business-case-for-augmented-reality.

2. Bryan Griffen, “Augmented Reality and the Smart Factory,” Apr. 12, 2019, manufacturing.net/article/2019/04/augmented-reality-and-smart-factory.

3. Shanhong Liu, “Global Augmented Reality Market Size 2025,” Statista, Aug. 17, 2018, statista.com/statistics/897587/world-augmented-reality-market-value.

4. P. Milgram, H. Takemura, A. Utsumi and F. Kishino, “Augmented Reality: A Class of Displays on the Reality-Virtuality Continuum,” Telemanipulator and Telepresence Technologies, SPIE, 1995.

Ed.: This article was originally published in the SMTA International Proceedings and is printed here with permission of the authors.

is director of global services – digital manufacturing solutions at Siemens Industry Software (siemens.com); jay.gorajia@siemens.com. is product manager at Siemens Industry Software. is director, Innovation Projects and rapid development manager at Siemens Industry Software. is cofounder and CEO at Compedia (compedia.com).