BPA Sees Need for Speed Accelerating at Data Center Networks

SURREY, UK -- High-performance data center or hyper data center networks, which require high-performance switches, will drive a significant increase in speeds, with the vast majority topping 100GbE by 2019, according to new research.

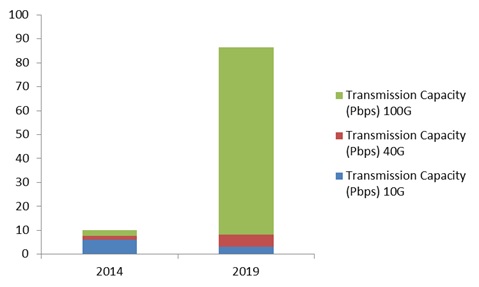

The high-performance networks increasingly mean interface speeds of at least 40GbE, and this year is seeing more 100GbE datalinks at the spine or core layer where traffic and application demands are highest, says BPA Consulting. By 2019, the firm adds, demand will be growing by an order of magnitude compared to 2014, with 100GbE becoming the dominant technology.

Demand for 100Gbps transceivers (in millions).

The complete results are part of the "BPA High Speed Report" available from the company.

As for the technologies, BPA says that a multilayer spine switch built on custom silicon with 40GbE or even 100GbE capacity can be a good investment for a large network. At the access layer, by contrast, where performance demands are lower, traditional switches based on merchant silicon, or white boxes, make sense both commercially and operationally. Custom-built silicon switches can deliver significant value through improved performance, reliability, durability and capacity, and such a technology choice may also support a longer life cycle.

In scenarios where high latency would be seriously detrimental, such as financial services transactions, custom-built silicon has a clear advantage because it eliminates the compromise between logic scale and physical scale, BPA concludes.
